Top 10 Low-Code AI Platforms to Simplify AI Development (2025)

ML projects often hit reality when dealing with training pipelines, model versioning, and deployment workflows. Having worked on AI implementations across different tech stacks, I’ve evaluated the leading low-code platforms that claim to simplify this complexity.

Here’s my technical breakdown of how these tools actually perform in production, their real integration capabilities, and where they fall short.

No marketing fluff, just practical insights for developers building AI systems.

Understanding Low-Code AI Development

Low-code refers to development platforms that minimize manual coding through visual interfaces and pre-built components. These platforms automate infrastructure setup, pipeline management, and deployment workflows for AI development while exposing APIs for critical customizations.

You focus on core ML logic and business rules while the platform handles the underlying complexity – similar to how Django abstracts database operations but lets you define models and business logic.


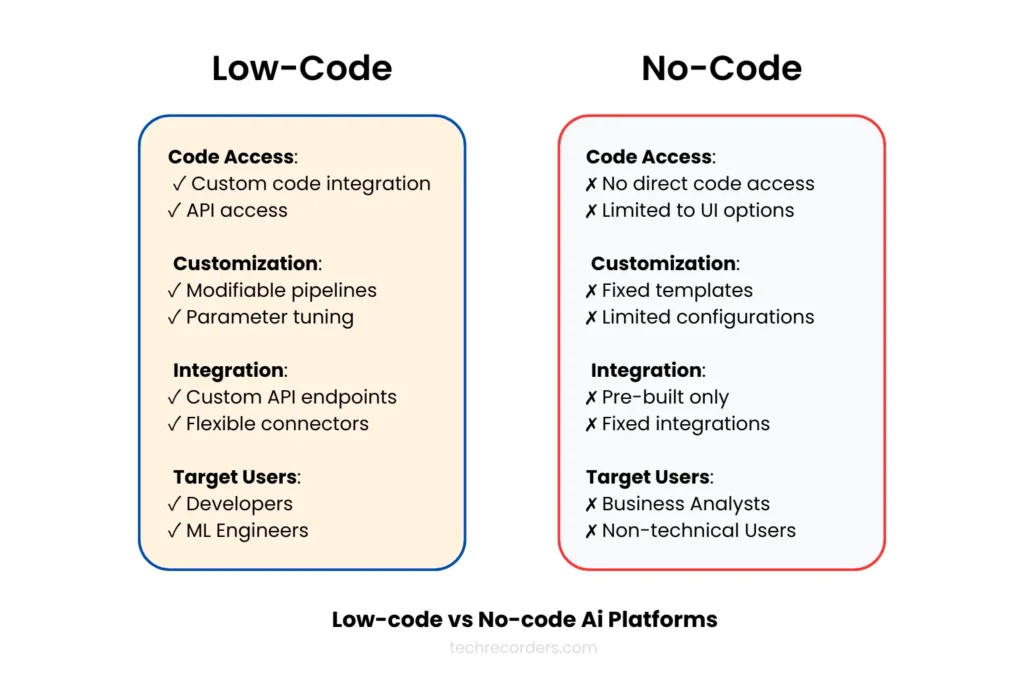

Difference between low-code and no-code

Low-code platforms let you inject custom code, modify training pipelines, and tune model parameters. You get APIs for integration and hooks for customization.

No-code platforms, by contrast, lock you into drag-and-drop interfaces and fixed templates: great for prototypes, limiting for production.
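To make the idea of "hooks for customization" concrete, here is a minimal sketch of a pipeline whose built-in steps are owned by the platform while the developer injects custom code at a named point. The `Pipeline` class, `register_hook`, and the step names are all hypothetical and do not correspond to any specific vendor's API.

```python
from typing import Callable, Dict, List, Tuple

Step = Callable[[Dict], Dict]


class Pipeline:
    """Hypothetical platform-managed pipeline with user-defined hook points."""

    def __init__(self) -> None:
        # Built-in steps the platform would own; names are illustrative only.
        self._steps: List[Tuple[str, Step]] = [
            ("load", lambda ctx: {**ctx, "rows": [1.0, 2.0, 3.0, 4.0]}),
            ("train", lambda ctx: {**ctx, "model_mean": sum(ctx["rows"]) / len(ctx["rows"])}),
        ]
        self._hooks: Dict[str, List[Step]] = {}

    def register_hook(self, after_step: str, fn: Step) -> None:
        """Attach custom code that runs right after a built-in step."""
        self._hooks.setdefault(after_step, []).append(fn)

    def run(self) -> Dict:
        ctx: Dict = {}
        for name, step in self._steps:
            ctx = step(ctx)
            # Run any developer-supplied hooks registered for this step.
            for hook in self._hooks.get(name, []):
                ctx = hook(ctx)
        return ctx


def drop_outliers(ctx: Dict) -> Dict:
    """Custom preprocessing injected by the developer (assumed rule: keep rows < 4.0)."""
    return {**ctx, "rows": [r for r in ctx["rows"] if r < 4.0]}


pipeline = Pipeline()
pipeline.register_hook("load", drop_outliers)
result = pipeline.run()
print(result["model_mean"])
```

A no-code tool, in this framing, would expose only `run()`; the low-code variant adds `register_hook` so you can change behavior without replacing the whole pipeline.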

Benefits and limitations

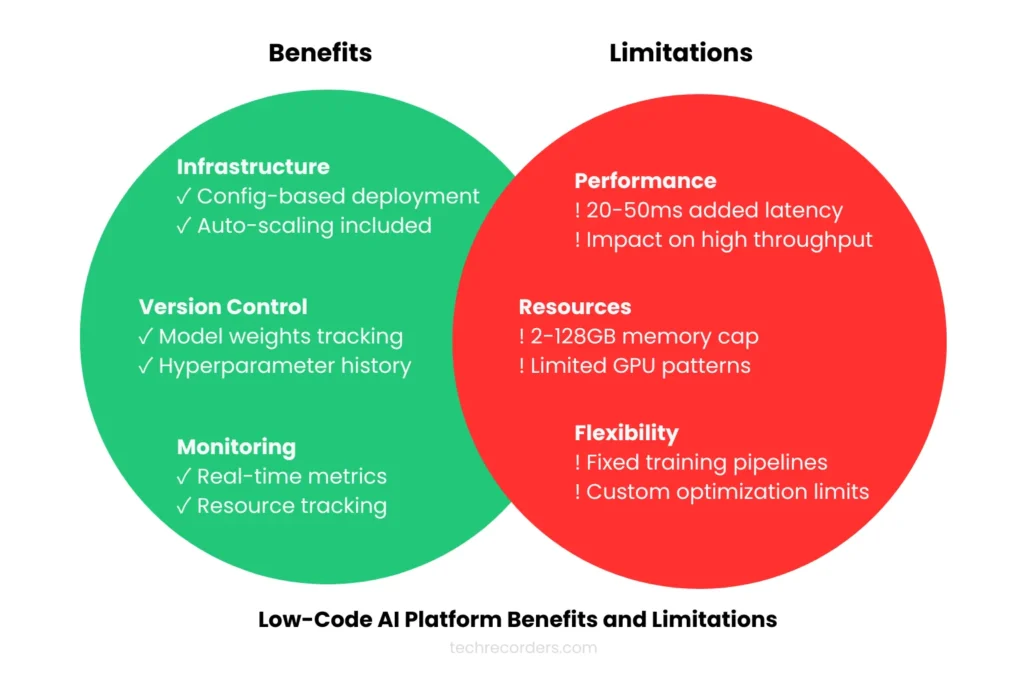

Low-code AI platforms introduce specific tradeoffs in your ML development workflow. Here’s what matters:

Benefit side: You get production-ready infrastructure through configuration instead of manual setup. Built-in versioning tracks your entire ML pipeline – from model weights to training data. Monitoring comes preconfigured for tracking model performance and resource usage.

Limitation side: The abstraction layer adds 20-50ms to inference times (critical for high-throughput systems). Memory caps at 2-128GB per instance limit large model deployments. Standard pipelines restrict custom optimizations you might need for cost-efficient training.
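One way to check the latency figure for your own workload is to compare the 95th-percentile latency of a direct model call against the same call routed through the platform. The sketch below assumes you can time both paths yourself; the function names are hypothetical, and the 50ms budget is simply the upper end of the range quoted above.

```python
import time
from typing import Callable, List

LATENCY_BUDGET_MS = 50.0  # upper end of the 20-50ms range quoted above


def time_calls(fn: Callable[[], object], runs: int = 50) -> List[float]:
    """Call fn repeatedly and return per-call latencies in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def p95(samples: List[float]) -> float:
    """95th percentile using the nearest-rank method."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n) in integer math
    return ordered[rank - 1]


def overhead_ms(direct: List[float], hosted: List[float]) -> float:
    """Added p95 latency of the hosted path relative to the direct path."""
    return p95(hosted) - p95(direct)


def within_budget(overhead: float, budget_ms: float = LATENCY_BUDGET_MS) -> bool:
    return overhead <= budget_ms


# Fixed, made-up samples so the example is reproducible; in practice,
# collect them with time_calls() against your own endpoints.
direct_samples = [12.0, 13.5, 11.8, 12.2, 14.0]
hosted_samples = [45.0, 52.5, 48.3, 47.1, 60.0]
added = overhead_ms(direct_samples, hosted_samples)
print(f"p95 overhead: {added:.1f} ms, within budget: {within_budget(added)}")
```

Comparing percentiles rather than means keeps occasional cold starts from hiding behind a healthy average.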

Selection Criteria for Evaluation

When choosing a low-code AI platform, focus on these critical factors:

1. Development Experience

Evaluate how quickly your team can start building. Check if the platform offers easy local development setup, reliable debugging tools, and clear deployment pipelines. Watch for framework conflicts and environment mismatches that could slow development.

2. Technical Capabilities

Your platform needs to balance convenience with control. Verify support for custom model architectures, training pipeline modifications, and resource management. Memory limits (typically 2-128GB per instance) often determine what you can build, regardless of other features.

3. Integration and Scaling

Look at API design, connector stability, and error handling patterns. Added latency should stay under 50ms to avoid system-wide performance issues. Cost structures usually combine compute resources, API calls, and storage – understand scaling thresholds where costs might spike unexpectedly.

4. Production Readiness

For reliable production deployments, examine security features (access control, audit logs), monitoring capabilities, and SLA terms. Test backup and recovery procedures. Multi-region support often costs extra but becomes essential as you scale.

Simple Evaluation Table:

| Feature | Must Have | Watch Out For |
|---|---|---|
| Development | Local testing environment, IDE support | Poor debugging tools, complex setup |
| Technical | Custom model support, pipeline control | Fixed architectures, memory limits |
| Integration | Stable APIs, error handling | High latency, poor connectors |
| Production | Security, monitoring, backups | Basic metrics, limited recovery options |
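If you want to turn the four criteria into a comparable number, a weighted score is one simple option. The sketch below is only an illustration: the weights, the 1-5 rating scale, and the two placeholder platforms are all assumptions, not real assessments.

```python
from typing import Dict

# Hypothetical weights for the four criteria above; adjust to your priorities.
WEIGHTS: Dict[str, float] = {
    "development": 0.25,
    "technical": 0.30,
    "integration": 0.25,
    "production": 0.20,
}


def weighted_score(ratings: Dict[str, int], weights: Dict[str, float] = WEIGHTS) -> float:
    """Combine 1-5 ratings per criterion into a single weighted average."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(ratings[name] * w for name, w in weights.items()) / total_weight


# Made-up ratings for two placeholder platforms.
candidates = {
    "platform_a": {"development": 4, "technical": 3, "integration": 5, "production": 4},
    "platform_b": {"development": 3, "technical": 5, "integration": 3, "production": 4},
}
ranked = sorted(candidates, key=lambda name: weighted_score(candidates[name]), reverse=True)
for name in ranked:
    print(name, round(weighted_score(candidates[name]), 2))
```

The score is only as good as the ratings behind it, so treat it as a way to structure the discussion rather than a verdict.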

Top Low-Code AI Platform Analysis

1. Google Cloud AI Platform (Vertex AI)

Vertex AI focuses on three main approaches for low-code AI development:

- Vertex AI Studio: Rapid prototyping and testing of generative AI models

- AutoML: Custom model training without deep ML expertise

- Vertex AI Agent Builder: Creating AI agents and data-grounded applications

Development Environment: The platform provides two main development interfaces:

- Colab Enterprise

- Vertex AI Workbench

Both offer full platform capabilities from data exploration to production deployment.

Available Models & Resources

- Model Garden: 150+ pre-trained models, including Gemini, Stable Diffusion, BERT, and T5

- Hardware Support: TPU/GPU acceleration options

- Development Tools: Integrated testing and monitoring systems

Implementation Areas

Common low-code development scenarios include:

- Document summarization with generative AI

- Image processing using pre-trained models

- RAG-based chat applications

- Custom ML model training with minimal code

Integration Options

- Native GCP service connections

- Multiple programming language support

- Custom container deployment

- Standard API access points

Cost Structure

The platform uses consumption-based pricing with several components:

- Training: Charged by compute time in 30-second increments

- Prediction: Node-hour based pricing (e.g., e2-standard-2 at $0.0926/hour in us-west2)

- AutoML Models: Training at $3.465/node hour for image tasks

- API Usage: Generative AI services starting at $0.0001 per request

- Model Monitoring: $3.50/GB for analyzed data

Google Cloud offers new customers up to $300 in initial credits that can be applied toward any Google Cloud AI and ML services, including Vertex AI. Resource costs vary by region and machine type, so carefully evaluate your workload patterns when planning deployment.
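Because the bill is the sum of several metered components, a quick back-of-the-envelope estimate helps before committing to a deployment. The sketch below uses only the rates quoted in the list above; the `Workload` fields and the example volumes are hypothetical, and real rates vary by region and machine type.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Rates:
    """Rates as quoted in this article; verify against current pricing before use."""
    prediction_node_hour: float = 0.0926        # e2-standard-2, us-west2
    automl_image_training_node_hour: float = 3.465
    genai_per_request: float = 0.0001           # "starting at" price
    monitoring_per_gb: float = 3.50


@dataclass(frozen=True)
class Workload:
    """Hypothetical monthly usage figures."""
    training_node_hours: float
    prediction_nodes: int
    hours_online: float
    genai_requests: int
    monitored_gb: float


def estimate_monthly_cost(w: Workload, rates: Rates = Rates()) -> Dict[str, float]:
    """Break a monthly estimate down by billing component (USD)."""
    parts = {
        "training": w.training_node_hours * rates.automl_image_training_node_hour,
        "prediction": w.prediction_nodes * w.hours_online * rates.prediction_node_hour,
        "genai_api": w.genai_requests * rates.genai_per_request,
        "monitoring": w.monitored_gb * rates.monitoring_per_gb,
    }
    parts["total"] = sum(parts.values())
    return parts


example = Workload(
    training_node_hours=10,
    prediction_nodes=1,
    hours_online=730,        # roughly one month, always on
    genai_requests=100_000,
    monitored_gb=2,
)
for component, usd in estimate_monthly_cost(example).items():
    print(f"{component:>10}: ${usd:,.2f}")
```

Note how an always-on prediction node accrues cost even with zero traffic, which is why workload patterns matter as much as the headline rates.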

2. Amazon SageMaker

SageMaker unifies ML development, analytics, and data management in a single platform. The Unified Studio provides developers a central environment for model development, generative AI work, and data processing, eliminating the need to switch between multiple tools.

The platform’s architecture centers on three main components:

- Unified Studio for development environment

- Lakehouse for data management

- Model development and deployment tools

Development Environment

You can access a comprehensive set of tools through the unified interface. This includes SQL analytics for data exploration, integrated processing frameworks, and direct access to foundation models through Amazon Bedrock. The platform supports both traditional ML model development and generative AI applications.

Data Management

One of SageMaker’s key strengths is its unified data access. Rather than dealing with isolated data silos, you can work with data across lakes, warehouses, and federated sources. Built-in governance through SageMaker Catalog handles security and access controls, making it practical for enterprise requirements.

Cost Structure

SageMaker follows AWS’s pay-as-you-go model. You’re charged based on the computing resources used for training and hosting, storage for notebooks and models, and API requests. The platform provides a free tier for initial testing, though production deployments need careful consideration of usage patterns and associated costs.

This infrastructure particularly suits teams needing integrated ML capabilities without managing multiple separate systems. However, consider your specific requirements for data processing and model deployment when evaluating it against alternatives.

3. Microsoft Azure Machine Learning

Azure Machine Learning delivers enterprise-grade ML infrastructure focusing on the complete development lifecycle. The platform emphasizes MLOps capabilities, automated training workflows, and responsible AI development – particularly valuable for teams requiring robust production deployments.

The service architecture comprises:

- ML Studio for end-to-end development workflow

- Automated ML for rapid model creation

- Managed endpoints for production deployment

- Feature store for reusable ML components

Development Environment

Azure ML Studio serves as your central workspace, handling everything from data preparation to model deployment. You can iterate data preparation on Apache Spark clusters, interoperable with Microsoft Fabric. The platform includes prompt flow features for language model workflows and a model catalog providing access to foundation models from Microsoft, OpenAI, Hugging Face, and others.

Infrastructure and Scaling

The platform runs on purpose-built AI infrastructure that combines the latest GPUs with InfiniBand networking. For production workloads, managed endpoints handle model deployment, metric logging, and safe rollouts. Built-in MLOps capabilities support CI/CD pipelines and automated workflows for enterprise-scale deployments.

Cost Management

Azure ML follows a consumption-based pricing model. You pay only for compute resources used during training or inference, with no extra cost for the platform itself. Teams can choose from various machine types, from general-purpose CPUs to specialized GPUs, allowing cost optimization based on workload requirements.

4. IBM Watson Studio

IBM Watson Studio differentiates itself with enterprise MLOps capabilities and deployment flexibility. The platform is available in two main deployment options: as part of IBM Cloud Pak for Data (self-managed) or as a service through IBM Cloud.

The platform combines:

- Open-source ML framework support (PyTorch, TensorFlow, scikit-learn)

- Multiple coding environments (Python, R, Scala)

- JupyterLab and Jupyter notebooks integration

- Visual modeling through SPSS Modeler

Development Environment

The workspace supports diverse development patterns through notebooks, CLIs, and visual tools. AutoAI capabilities automate pipeline creation and model selection while maintaining transparency. The platform includes built-in data preparation tools with a graphical flow editor for data cleansing and shaping operations.

MLOps and Monitoring

Watson Studio provides comprehensive model lifecycle management:

- Quality, fairness, and drift monitoring

- Customizable model metrics

- Automated model validation

- Production feedback-based retraining

- REST API deployment across clouds
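To give a feel for what drift monitoring measures, here is a generic sketch of the Population Stability Index (PSI), a common way to compare a feature's distribution at training time against live traffic. This is not Watson Studio's implementation; the bin edges, sample values, and function names are assumptions chosen for illustration.

```python
import math
from typing import List, Sequence


def histogram(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Fraction of values per bin; values outside the edges are ignored."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            is_last = i == len(counts) - 1
            # Bins are half-open [lo, hi), except the last one includes its upper edge.
            if edges[i] <= v < edges[i + 1] or (is_last and v == edges[-1]):
                counts[i] += 1
                break
    total = sum(counts)
    if total == 0:
        raise ValueError("no values fall inside the bin edges")
    return [c / total for c in counts]


def psi(expected: Sequence[float], actual: Sequence[float],
        edges: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index: sum((a - e) * ln(a / e)) over bins."""
    score = 0.0
    for pe, pa in zip(histogram(expected, edges), histogram(actual, edges)):
        # Clamp empty bins to a small value so the logarithm stays defined.
        pe, pa = max(pe, eps), max(pa, eps)
        score += (pa - pe) * math.log(pa / pe)
    return score


edges = [0.0, 0.25, 0.5, 0.75, 1.0]
training = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
live = [0.6, 0.7, 0.8, 0.9, 0.9, 0.95, 0.85, 0.8]

print(f"PSI vs. itself: {psi(training, training, edges):.3f}")
print(f"PSI vs. live:   {psi(training, live, edges):.3f}")
```

A score of zero means the binned distributions match; larger values indicate more drift, and the threshold that triggers retraining is a judgment call for each use case.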

Deployment Options

Two primary paths exist:

- Cloud Pak for Data: Licensed deployment for private/public clouds

- Cloud Pak for Data as a Service: Pay-as-you-go on IBM Cloud

Pricing follows enterprise software models, requiring direct consultation for specific costs as they vary based on deployment model and scale. A limited trial environment is available for evaluation.

It is best suited for enterprise teams needing strong model governance and flexible deployment options. The platform is a particularly good fit for regulated industries that require detailed model risk management and bias monitoring.

5. H2O.ai Platform

H2O.ai combines generative and predictive AI capabilities while offering complete stack ownership. The platform supports on-premise, air-gapped, and cloud VPC deployments, giving developers full control over their AI infrastructure.

The platform integrates:

- AutoML for automated model development

- Document AI with multimodal processing

- Choice of 30+ LLMs (both proprietary and open-source)

- Kubernetes-based deployment infrastructure

Development Options

Developers can choose between proprietary LLMs (GPT-4, Claude) and open-source models (Mixtral, H2O Danube). The platform’s Danube series, trained on 6T tokens, provides efficient performance for lightweight tasks. All development tools support custom API integration and infrastructure control.

Production Capabilities

The platform targets enterprise deployments such as document processing, fraud detection, and automated support systems. With SOC2 Type 2 and HIPAA/HITECH compliance, it meets strict security requirements.

It can provide up to a 25x cost reduction compared with token-based services, which is particularly valuable for large-scale implementations.

This solution particularly fits organizations needing complete control over their AI infrastructure while maintaining enterprise-grade capabilities. The fixed hardware costs versus token-based pricing make it attractive for high-volume production deployments.
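The fixed-versus-token trade-off comes down to a break-even volume: below it, paying per token is cheaper; above it, fixed hardware wins. The sketch below works through that arithmetic with entirely hypothetical prices; neither figure comes from H2O.ai or any other vendor.

```python
def monthly_token_cost(tokens_per_month: int, price_per_million_tokens: float) -> float:
    """Cost of a token-billed service for a given monthly volume (USD)."""
    return tokens_per_month / 1_000_000 * price_per_million_tokens


def break_even_tokens(fixed_monthly_cost: float, price_per_million_tokens: float) -> float:
    """Monthly token volume at which fixed and per-token costs are equal."""
    if price_per_million_tokens <= 0:
        raise ValueError("price_per_million_tokens must be positive")
    return fixed_monthly_cost / price_per_million_tokens * 1_000_000


# Hypothetical inputs, for illustration only.
FIXED_HARDWARE_USD = 8_000.0   # assumed monthly cost of self-hosted hardware
TOKEN_PRICE_USD = 10.0         # assumed price per million tokens

threshold = break_even_tokens(FIXED_HARDWARE_USD, TOKEN_PRICE_USD)
print(f"break-even: {threshold:,.0f} tokens/month")

for volume in (200_000_000, 2_000_000_000):
    token_cost = monthly_token_cost(volume, TOKEN_PRICE_USD)
    cheaper = "fixed hardware" if token_cost > FIXED_HARDWARE_USD else "token-based"
    print(f"{volume:,} tokens: token bill ${token_cost:,.0f}, cheaper option: {cheaper}")
```

The calculation ignores staffing and operations overhead for self-hosting, which can shift the break-even point considerably.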

6. DataRobot Platform

DataRobot provides an enterprise AI platform focusing on end-to-end ML lifecycle automation. The platform emphasizes rapid development and deployment while maintaining powerful governance controls.

The platform structure offers:

- No-code AI app development interface

- MLOps automation and monitoring

- Support for multiple infrastructure options (cloud, on-premise, VPC)

- Built-in governance controls

Development Experience

The platform supports various project types including binary classification, regression, time series, and geospatial analysis. You can create and configure AI-powered applications through a no-code interface, though with some limitations – feature transformations using exponentiation aren’t supported, and DataRobot-generated features can’t be used in applications.

Production Management

The platform includes practical production constraints worth noting:

- Organization limit of 200 applications (expandable through enterprise licensing)

- 10,000 point limit for Grid Search optimization

- Time series applications require specific time unit configurations

- Built-in prediction explanations for governance requirements
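The Grid Search limit is easy to exceed because the number of points grows multiplicatively with each parameter. The sketch below assumes that a "point" is one combination in the Cartesian product of parameter values, which is the usual definition but not confirmed here for DataRobot; the parameter names are placeholders.

```python
import math
from typing import Dict, Sequence

GRID_POINT_LIMIT = 10_000  # limit quoted in the list above


def grid_points(param_grid: Dict[str, Sequence]) -> int:
    """Number of combinations in the Cartesian product of all parameter values."""
    return math.prod(len(values) for values in param_grid.values())


def fits_limit(param_grid: Dict[str, Sequence], limit: int = GRID_POINT_LIMIT) -> bool:
    return grid_points(param_grid) <= limit


# Placeholder hyperparameters with 10 candidate values each.
grid = {
    "learning_rate": [0.01 * i for i in range(1, 11)],
    "max_depth": list(range(2, 12)),
    "n_estimators": list(range(100, 1100, 100)),
    "subsample": [0.5 + 0.05 * i for i in range(10)],
}
print(grid_points(grid), fits_limit(grid))

# Adding one more two-valued parameter doubles the grid.
grid["bootstrap"] = [True, False]
print(grid_points(grid), fits_limit(grid))
```

Four parameters with ten values each already sit exactly at the limit, so coarser grids or a staged search are worth planning for up front.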

Implementation Scale

The platform claims significant production metrics:

- 83% faster deployment rates

- 100+ use cases in production

- 1.4B predictions secured daily

- Support for both predictive and generative AI workflows

It is best suited for enterprise teams needing rapid AI implementation with strong governance. Consider the application limits and feature constraints when planning large-scale deployments.

7. Altair RapidMiner

Altair RapidMiner enables data analytics and AI capabilities with an emphasis on enterprise scalability and security. The platform differentiates itself through its modular, open architecture and comprehensive knowledge graph capabilities.

The platform structure includes:

- AI Studio for model development and automation

- Graph Studio for enterprise knowledge graphs

- IoT Studio for building scalable applications

- Monarch for data preparation and blending

Development Experience

The platform supports multiple development approaches – visual, automated, and code-based. Data scientists can build ML workflows using automated tools while maintaining the ability to fine-tune with code. The Graph Lakehouse engine enables fast query execution against large datasets, which is particularly useful for enterprise-scale knowledge graphs.

Technical Implementation

Key capabilities include:

- Real-time data processing and streaming

- High-performance graph processing engine

- Support for hybrid deployments (cloud/on-premise)

- Built-in model explainability features

Platform Focus

It is best suited for enterprise teams needing to integrate AI into existing workflows. The platform is particularly good at handling semi-structured data and building scalable AI applications. Consider this option when requiring strong data fabric capabilities alongside AI development tools.

8. Obviously AI

Obviously AI combines automated ML capabilities with dedicated data science support, focusing on rapid model development without coding. It stands out by pairing no-code software with expert guidance for enterprise AI implementation.

The platform provides:

- Automated classification, regression, and time series models

- ETL and data preparation tools

- Custom LLM development and RAG models

- Advanced AI for optimization and anomaly detection

Development Environment

The platform supports rapid model development through its no-code interface, handling up to 1 billion rows of data. Users can deploy through REST APIs or interactive dashboards, while higher tiers include dedicated data scientists for complex requirements.

Technical Implementation

Important features include model code export (.pkl files), REST API integration, multi-tenant management, and unlimited retraining capabilities. The platform scales from basic predictive models to advanced AI applications with professional support.

Cost Structure

Pricing ranges from a free tier (1.2M predictions) to enterprise plans:

- Basic: $999/mo (100M rows)

- Pro: $2,999/mo (250M rows)

- Ultimate: Custom pricing (1B+ rows)
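Since the paid tiers are bounded by row count, mapping an expected dataset size to a tier is a simple lookup. The sketch below encodes only the row limits and prices listed above; it leaves out the free tier because that is quoted in predictions rather than rows, and it assumes anything above the Pro limit falls under custom pricing.

```python
from typing import List, Optional, Tuple

# (tier name, maximum rows, monthly price in USD) as listed above.
TIERS: List[Tuple[str, int, int]] = [
    ("Basic", 100_000_000, 999),
    ("Pro", 250_000_000, 2_999),
]


def pick_tier(rows: int) -> Tuple[str, Optional[int]]:
    """Return the smallest paid tier that covers the row count.

    A price of None means custom pricing (assumed for anything above Pro).
    """
    if rows < 0:
        raise ValueError("rows must be non-negative")
    for name, max_rows, price in TIERS:
        if rows <= max_rows:
            return name, price
    return "Ultimate", None


for rows in (50_000_000, 180_000_000, 1_200_000_000):
    name, price = pick_tier(rows)
    label = f"${price:,}/mo" if price is not None else "custom pricing"
    print(f"{rows:,} rows -> {name} ({label})")
```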

9. MindsDB

MindsDB enables enterprises to build AI applications directly on their data infrastructure. The platform distinguishes itself by providing AI capabilities while maintaining direct database integration, making it particularly valuable for complex data environments.

The platform gives you:

- Real-time AI processing engine

- Direct database and data warehouse connections

- Support for multiple deployment options

- Enterprise-grade security features

Development Environment

You can build AI applications and agents directly on your data sources, eliminating traditional data pipeline complexities. The platform’s federated query engine handles data sprawl across databases, warehouses, and SaaS applications.

Technical Implementation

Important features include real-time data processing, federated queries, and flexible deployment options (VPC, on-premise, cloud). The platform prioritizes enterprise security while maintaining scalability for production workloads.

It is best for enterprise teams needing sophisticated AI solutions with direct data integration, and it is particularly effective for organizations that deal with distributed data sources and require real-time AI processing.

10. Apple Create ML

Create ML is Apple’s approach to making machine learning development accessible. Rather than being a general-purpose low-code platform, it works best as a specialized tool for building ML models that integrate seamlessly with Apple devices and frameworks.

This provides:

- Visual model training interface

- Support for diverse ML tasks (image, video, text, sound, motion)

- Hardware-optimized performance

- Direct Core ML integration

Development Experience

Create ML simplifies ML development through an intuitive interface that handles complex tasks automatically. You can train models through a visual workspace or programmatically using Swift, with real-time feedback on training progress. Its main strength is its ability to leverage Apple hardware for optimized training and inference.

Create ML Capabilities

The platform supports a comprehensive range of ML tasks:

- Image classification and object detection

- Action and sound classification

- Text analysis and natural language processing

- Tabular data processing

- Hand pose and motion detection

Overall, Create ML is a good choice for developers building AI features for Apple platforms. Although it is limited to the Apple ecosystem, it noticeably reduces ML implementation complexity by handling data management, model optimization, and deployment.

Wrap Up

The bottom line on low-code AI platforms is this: they greatly speed up the less glamorous parts of ML – infrastructure, version control, deployment – but there’s a catch. You get increased inference latency (a big deal for high-throughput apps), model size limitations due to memory constraints, and potentially less flexibility for fine-tuning performance.

Picking the right platform therefore matters, so weigh these trade-offs carefully against your own workload.
